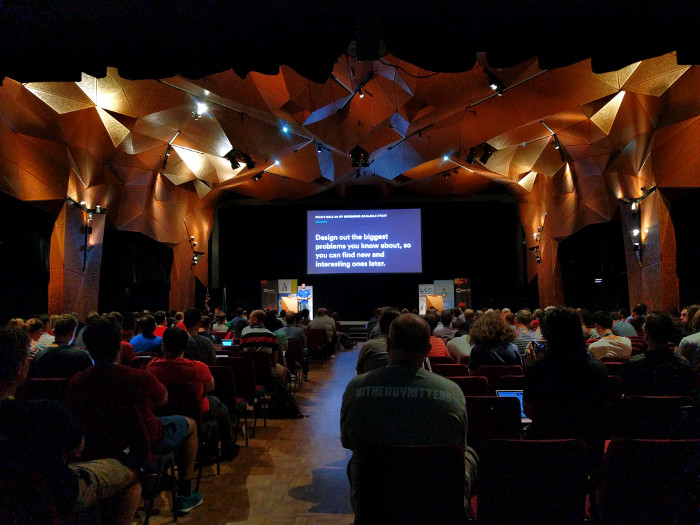

The annual Open Source Monitoring Conference (OSMC), held in Nürnberg, Germany, brings together pretty much everyone who is anyone in the free and open source monitoring space. I really look forward to attending, and so do a number of other people at OpenNMS, but this year I won the privilege, so go me.

The conference is a lot of fun, which must be the reason for the hell trip to get here this year. Karma must be trying to bring things into balance.

As an American Airlines frequent flier whose home airport is RDU, most of my trips to Europe involve Heathrow airport (American has a direct flight from RDU to LHR that I’ve taken more times than I can count).

I hate that airport with the core of my being, and try to avoid it whenever possible. While I could have taken a flight from LHR directly to Nürnberg on British Airways, I decided to fly to Philadelphia and take a direct American flight to Munich. It is just about two hours by train from MUC to Nürnberg Hbf and I like trains, so combine that with getting to skip LHR and it is a win/win.

But it was not to be.

I got to the airport and watched as my flight to PHL got delayed further and further. Chris, at the Admiral’s Club desk, was able to re-route me, but that meant a flight through Heathrow (sigh). Also, the Heathrow flight left five hours later than my flight to Philadelphia, and I ended up waiting it out at the airport (Andrea had dropped me off and I didn’t want to ask her to drive all the way back to get me just for a couple of hours).

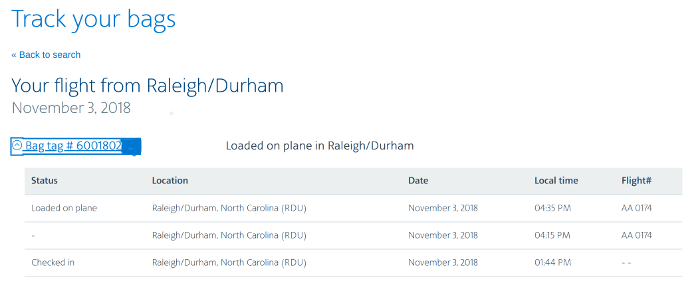

Because of the length of this trip I had to check a bag, and I had a lot of trepidation that my bag would not be re-routed properly. Chris even mentioned that American had actually put it on the Philadelphia flight but he had managed to get it removed and put on the England flight, and American’s website showed it loaded on the plane.

That also turns out to be the last record American has on my bag, at least on the website I can access.

The flight to London was uneventful. American planes tend to land at Terminal 3 and most British Airways planes take off from Terminal 5, so you have to make your way down a series of long corridors and take a bus to the other terminal. Then you have to go through security, which is usually when my problems begin.

I wear contact lenses, and since my eyes tend to react negatively to the preservatives found in saline solution I use a special, preservative-free brand of saline. Unfortunately, it is only available in 118ml bottles. As most frequent fliers know, the limit for the size of liquid containers for carry-on baggage is 100ml, although the security people rarely notice the difference. When they do I usually just explain that I need it for my eyes and I’m allowed to bring it with me. That is, everywhere except Heathrow airport. Due to the preservative-free nature of the saline I can’t move it to another container for fear of contamination.

Back in 2011 was the first time that my saline was ever confiscated at Heathrow. Since then I’ve carried a doctor’s note stating that it is “medically necessary”, but even then I had it confiscated once, a few years later at LHR, because the screener didn’t like the fact that my note was almost a year old. That said, I have gone through that airport many times with no one noticing the slightly larger size of my saline bottle, but on this trip it was not to be.

When your carry-on items get tagged for screening at Heathrow’s Terminal 5, you kind of wait in a little mob of people for the one person to methodically go through your stuff. Since I had several hours between flights it was no big deal for me, but it is still very annoying. Of course when the screener got to my items he was all excited that he had stopped the terrorist plot of the century by discovering my saline bottle was 18ml over the limit, and he truly seemed disappointed when I produced my doctor’s note, freshly updated as of August of this year.

Screeners at Heathrow are not imbued with much decision making ability, so he literally had to take my note and bottle to a supervisor to get it approved. I was then allowed to take it with me, but I couldn’t help thinking that the terrorists had won.

The rest of my stay at the world’s worst airport was without incident, and I squeezed into my window seat on the completely full A319 to head to Munich.

Once we landed I breezed through immigration (Germans run their airports a bit more efficiently than the British) and waited for my bag. And waited. And waited.

When I realized it wouldn’t be arriving with me, I went to look for a BA representative. The sign said to find them at the “Lost and Found” kiosk, but the only two kiosks in the rather small baggage area were not staffed. I eventually left the baggage area and made my way to the main BA desk, where I managed to meet Norbert. After another 15 minutes or so, Norbert brought me a form to fill out and promised that I would receive an e-mail and a text message with a “file number” to track the status of my bag.

I then found the S-Bahn train which would take me to the Munich Hauptbahnhof where I would get my next train to Nürnberg.

I had made a reservation for the train to ensure I had a seat, but of course that was on the 09:55 train which I would have taken had I been on the PHL flight. I changed that to a 15:00 train when I was rerouted, and apparently one change is all you get with Deutsche Bahn, but Ronny had suggested I buy a “flexpreis” ticket so I could take any train from Munich to Nürnberg that I wanted. I saw there were a number of “Inter-City Express (ICE)” trains available, so I figured I would just hop on the first one I found.

When I got to the station I saw that a train was leaving from Platform (Gleis) 20 at 15:28. It was now 15:30 so I ran and boarded just before it pulled out of the station.

It was the wrong train.

Well, not exactly. There are a number of types of trains you can take. The fastest are the ICE trains that run non-stop between major cities, but there are also “Inter-City (IC)” trains that make more stops. I had managed to get on a “Regional Bahn (RB)” train which makes many, many stops, turning my one hour trip into three.

(sigh)

The man who took my ticket was sympathetic, and told me to get off at Ingolstadt and switch to an ICE train. I was chatting on Mattermost with Ronny most of this time, and he was able to verify the proper train and platform I needed to take. That train was packed, but I ended up sitting with some lovely people who didn’t mind chatting with me in English (I so love visiting Germany for this reason).

So, about seven hours later than I had planned I arrived at my hotel, still sans luggage. After getting something to eat I started the long process of trying to locate my bag.

I started on Twitter. The people at both American and British Airways asked me to DM them. The AA folks said I needed to talk with the BA folks, and the BA folks have yet to reply to me. Seriously BA, don’t reach out to me if you don’t plan to do anything. It sets up expectations you apparently can’t meet.

Speaking of not doing anything, my main issue was that I needed a “file reference” in order to track my lost bag, but despite Norbert’s promise I never received a text or e-mail with that information. I ended up calling American, and the woman there was able to tell me that her records showed the bag was in the hands of BA at LHR. That was at least a start, so she transferred me to BA customer support, who in turn transferred me to BA delayed baggage, who told me I needed to contact American.

(sigh)

As calmly as I could, I reiterated that I started there, and then the BA agent suggested I visit a particular website and complete a form (similar to the one I did for Norbert I assume) to get my “file reference”. After making sure I had the right URL I ended the call and started the process.

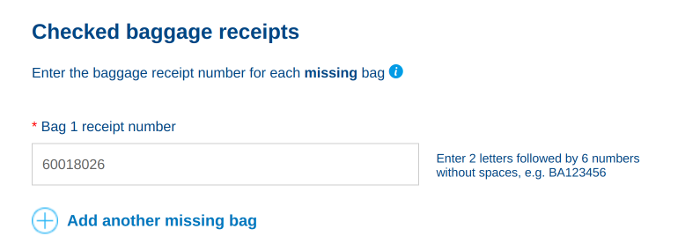

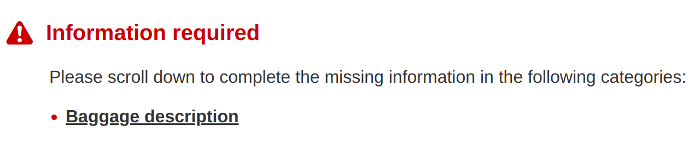

I hit the first snag when trying to enter in my tag number. As you can see from the screenshot above, my tag number starts with “600” and is ten digits long. The website expected a tag number that started with “BA” followed by six digits, so my AA tag was not going to work.

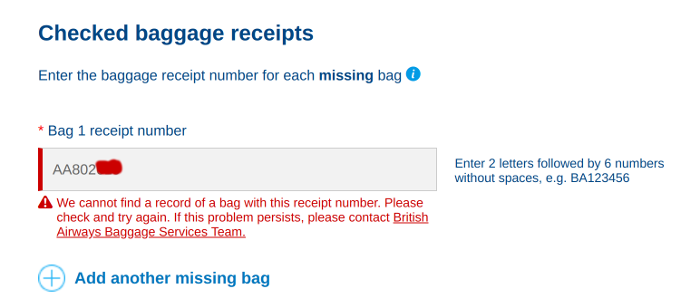

But at least this website had a different number to call, so I called it and explained my situation once again. This agent told me that I should have a different tag number, and after looking around my ticket I did find one in the format they were after, except starting with “AA” instead of “BA”. Of course, when I entered that in I got an error.

After I explained that to the agent I remained on the phone for about 30 minutes until he was able to, finally, give me a file reference number. At this point I was very tired, so I wrote it down and figured I would call it a night and go to sleep.

But I couldn’t sleep, so I tried to enter that number into the BA delayed bag website. It said it was invalid.

(sigh)

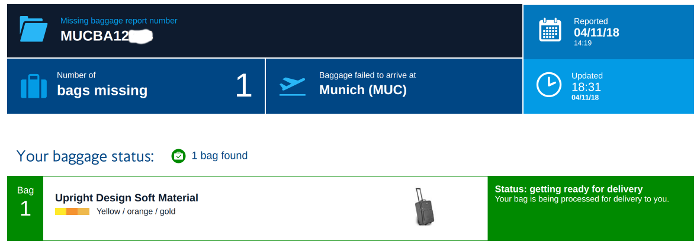

Then I got a hint of inspiration and decided to enter in my first name as my last, and voila! I had a missing bag record.

That site said they had found my bag (the agent on the phone had told me it was being “traced”) and it also asked me to enter in some more information about it, such as the brand of the manufacturer.

Of course when I tried to do that, I got an error.

Way to go there, British Airways.

Anyway, at that point I could sleep. As I write this the next morning nothing has been updated since 18:31 last night, but I hold out hope that my bag will arrive today. I travel a lot so I have a change of clothes with me along with all the toiletries I need to not offend the other conference attendees (well, at least with my hygiene), but I can’t help but be soured on the whole experience.

This year I have spent nearly US$20,000 with American Airlines (they track that for me on their website). I paid them for this ticket and they really could have been more helpful instead of just washing their hands and pointing their fingers at BA. British Airways used to be one of the best airlines on the planet, but lately they seem to have turned into Ryanair but without that airline’s level of service. The security breach that exposed the personal information of their customers, stories like this recent issue with a flight from Orlando, and my own experience this trip have really put me off flying them ever again.

Just a hint BA – from a customer service perspective – when it comes to finding a missing bag all we really want (well, besides the bag) is for someone to tell us they know where it is and when we can expect to get it. The fact that I had to spend several hours after a long trip to get something approximating that information is a failure on your part, and you will lose some if not all of my future business because of it.

I also made the decision to further curtail my travel in 2019, because frankly I’m getting too old for this crap.

So, I’m now off to shower and to get into my last set of clean clothes. Here’s hoping my bag arrives today so I can relax and enjoy the magic that is the OSMC.