With the change in administration in the United States, Customs and Border Protection (CBP) has changed its behavior to include actions with which I don’t agree. These include forcing a US citizen to unlock his mobile device, even though it was a work device and contained sensitive information. I set out to decide how I will deal with this situation should it arise in the future.

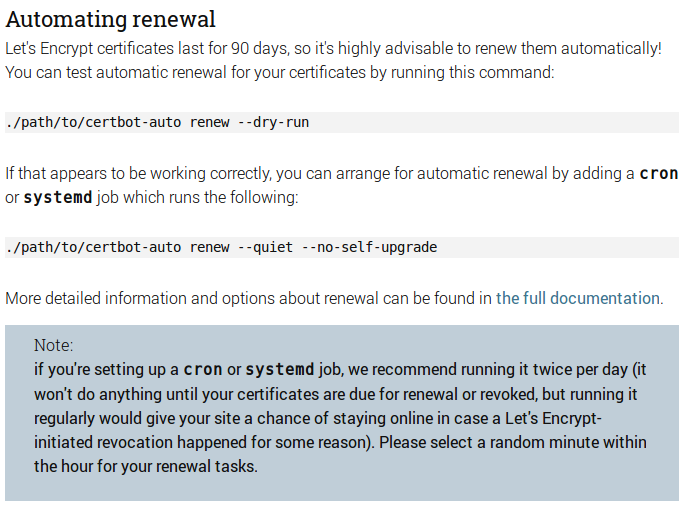

TL;DR My plan is as follows: before I enter the United States, I will generate a long, random password and set that as the encryption password for my laptop and my handy. I will then ssh into an old iMac I have on my desk, store the password in a file, and then shut the computer down. At that point I will not be able to access the information on my device until I return to the office and power on the system.

UPDATE: The EFF has published a detailed guide to help understand your rights at the border.

First off, let me say that until recently I’ve always respected CBP. They have a tough job and everyone I’ve ever met while returning from my travels has been efficient, competent and friendly.

But after the recent “Muslim Ban” fiasco I’ve come to realize that my experience is not universal. I think one of the main problems is this idea that the Constitution stops at the CBP desk, and until you are past it you really aren’t “in America” and thus the Constitution doesn’t apply.

I don’t agree with this interpretation, but it can probably be traced to the actions taken by the US government after 9/11 and the creation of the prison at Guantanamo Bay.

Prior to that, when “bad hombres” were captured by the US government, they fell into one of two categories: criminals or prisoners of war. How each class was treated was fairly well defined. Criminals were processed according to the rule of law, and the treatment of POWs was covered under the various Geneva Conventions.

The US government decided that those two classifications were inconvenient, and so they ventured into the murky waters of “enemy combatant” and Guantanamo. Their logic goes that since Guantanamo isn’t in the US, US law doesn’t apply, and since these people aren’t members of a foreign country’s military force with which we are at war, they aren’t POWs. So, the US gets to make up its own rules about how these people are treated.

This is dangerous for a number of reasons. Since nothing is really codified about the treatment and rights of the detainees at Guantanamo, the rules are arbitrary. Also, this opens the door for other countries such as Russia to do similar things without fear of international repercussions. The US has survived for so long because things like this are not supposed to happen, yet here we are.

This thought now extends to the border. Even though a US citizen is being questioned by another US citizen, in the role of a representative of the US government on US soil, somehow the rules of the Constitution are suspended. It’s arbitrary and I don’t buy it. The Constitution codifies a right to privacy in the Fourth Amendment, and it doesn’t go away when entering the country. And it definitely extends to mobile devices, which in today’s world are probably the most personal item people own.

So how can people like me, with almost no political power, resist this threat to our freedom?

I’ve always done little things, like opting out of millimeter wave scans at airports and getting a pat down instead (I’m not shy). If everyone did this the whole system would collapse, and they would find better ways of dealing with security than the security theater we have now. Seriously, if the Israelis don’t use it, it ain’t worth using.

When I turned to the problem of dealing with CBP, my main thoughts went to two devices that I use when traveling: my handy (mobile “phone”) and my laptop. I figured the easiest thing to do would be to just wipe them before coming into the country, but that presents some logistics problems.

For example, I could make a backup of my handy, copy it to a server at home, and then wipe it. The problem is that I have 64GB of storage on the device and I doubt I could transfer a backup in time over, say, a hotel Wi-Fi connection. One of my coworkers uses an iPhone and thought about wiping their phone and just restoring it from iCloud once they were in the country, but then CBP could require that they turn over their iCloud password.

On my laptop I use whole disk encryption, but I thought about just rsync’ing my home directory to a server and then deleting it before leaving. Then again, there is the Wi-Fi issue, and I really don’t want to have to deal with copying everything back when I’m home.

Then it dawned on me that if I didn’t know the encryption password, then I couldn’t reveal it. The problem became how to create a secure password that I couldn’t remember yet could still get back when I needed it.

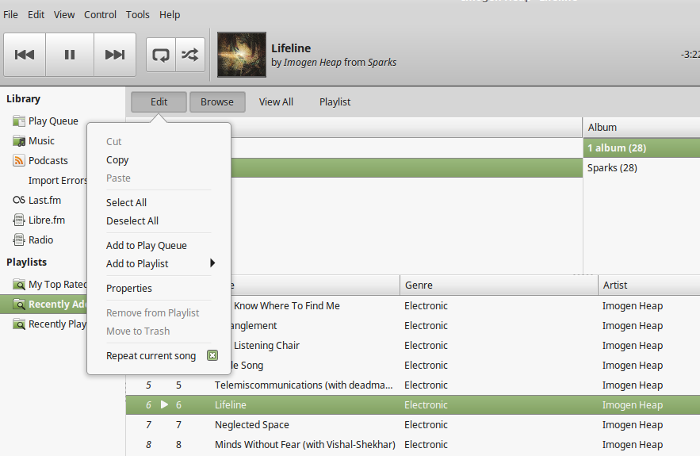

While my main desktop computer runs Linux Mint, I keep an old iMac on my desk mainly to run WebEx sessions and for those rare times I am forced to use a piece of software not available for Linux. It’s connected to the network, so I can access it remotely. But, if I can access it, I would be lying if CBP asked me for my password and I said I couldn’t retrieve it. Unlike the US Attorney General, I refuse to perjure myself.

Then I realized that I could shut the iMac down remotely and have no way to turn it back on. Thus I could store a passphrase on it, retrieve it when I was back in the country, but until then I would be unable to unlock my devices.
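For the curious, the passphrase step might look something like the sketch below. This is only a rough illustration, not the exact procedure I will use: the host name `me@imac.example`, the file path `~/passphrase.txt`, the 32-character length and the helper names are all made up for the example, and it only prints the ssh commands rather than running them.

```python
import secrets
import string

# Letters and digits only, so the passphrase is easy to type on any keyboard.
ALPHABET = string.ascii_letters + string.digits


def generate_passphrase(length: int = 32) -> str:
    """Return a random passphrase drawn from a cryptographically secure source."""
    if length < 16:
        raise ValueError("use at least 16 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def remote_commands(host: str, path: str) -> list[list[str]]:
    """Build (but do not run) the two ssh commands described in the text.

    The host and path are hypothetical placeholders. The first command
    expects the passphrase on standard input; the second powers the
    machine off, which assumes the remote user is allowed to use sudo.
    """
    return [
        ["ssh", host, f"umask 077; cat > {path}"],
        ["ssh", host, "sudo shutdown -h now"],
    ]


if __name__ == "__main__":
    passphrase = generate_passphrase()
    print("New passphrase:", passphrase)
    for argv in remote_commands("me@imac.example", "~/passphrase.txt"):
        print("Would run:", " ".join(argv))
```

With 62 possible characters per position, a 32-character passphrase carries roughly 190 bits of entropy, which is far more than I could ever memorize at a glance. The order matters: the file has to be written and verified before the shutdown command is sent, because after that there is no second chance until I am back at my desk.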

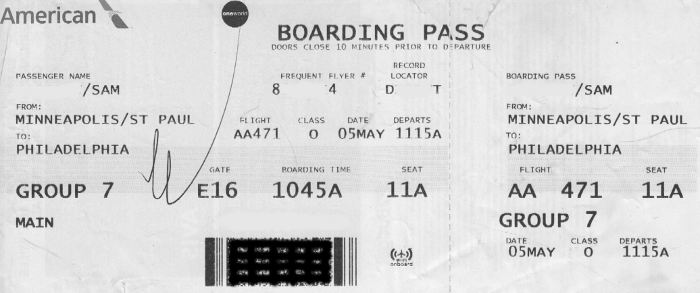

That became the plan. So, the next time I’m returning from overseas, I’ll generate a new, random password. I’ll set that as the whole disk encryption password on my laptop and the encryption password on my handy (note that this is different from the screen-lock password). This will also tie up all of my social network passwords since I use complex ones and store them on those devices. The one exception is my Google account, but since I use two-factor authentication I should be safe, as my handy is the device that generates the codes (and I won’t carry any of the backup codes). As long as both of those devices stay powered on, I’ll be able to use them, but once I power them off they will be useless until I get to the office, power on the iMac, and retrieve the passphrase. Note that by the time I can do that, I’ll be firmly in the US and anyone who wants me to unlock my devices will need a court order.

Which I would respect, unlike CBP. I think the scariest part of the whole “Muslim Ban” incident was when CBP refused to honor court orders. America is built on three branches of government, and when the Executive branch ignores the orders of the Judicial branch we are all in trouble.

I had two other problems to address, one of which is already solved. If I’m in the US but my handy is locked, how would I make calls? I might need to call my ride home, etc. To that end I bought a cheap “feature” phone and I’ll just move the SIM card to it when we land.

The second issue is that while I should be on solid legal ground concerning my electronic devices, there is nothing preventing CBP from holding me for a long time. Thus the final step is to find an attorney and execute a G-28 form allowing them to represent me. I’m not sure if I need a civil rights lawyer or an immigration lawyer but I’m looking into it. My goal is to be able to notify my attorney when I am coming back into the country, and then send an SMS to them when I am through immigration. If that doesn’t arrive within two hours of my scheduled arrival, they need to come and get me.

I think the thing that bothers me the most about this whole process is the need for it. I’m not a tinfoil-hat conspiracy guy but the actions of the new government have me worried. As I use open source software almost exclusively I know I’m safer than most when it comes to surveillance, and I also don’t expect to run into any problems being an older, white male. But I’d rather be safe than sorry, and the only thing necessary for the triumph of evil is that good men do nothing.