This year I got to return to the Open Source Monitoring Conference hosted by Netways in Nürnberg, Germany.

Netways is one of the sponsors of the Icinga project, and for many years this conference was dedicated to Nagios. It is still pretty Nagios-centric, but now it is focused more on the forks of that project than the project itself. There were presentations on Naemon and Sensu as well as Icinga, and then there are the weirdos (applications that are not built around check scripts) such as Zabbix and OpenNMS.

I like this conference for a number of reasons. Mainly there really isn’t any other conference dedicated to monitoring, much less one focused on open source. This one brings together pretty much the whole gang. Plus, Netways has a lot of experience in hosting conferences, so it is a nice time: well organized, good food and lots of discussion.

My trip started off with an ominous text from American Airlines telling me that my flight from RDU to DFW was delayed. While flying through DFW is out of the way, it enables me to avoid Heathrow, which is worth the extra time and effort. On the way to the airport I was told my outbound flight was delayed to the point that I wouldn’t be able to make my connection, so I called the airline to ask about options.

With the acquisition by US Airways, I had the option to fly through CLT. That would cut off several hours of the trip and let me ride on an Airbus 330. American flies mainly Boeing equipment, so I was curious to see if the Airbus was any better.

As usual with flights to Europe, you leave late in the evening and arrive early in the morning. Ulf and I settled in for the flight and I was looking forward to meeting up with Ronny when we landed.

The trip was uneventful and we met up with Ronny and took the ICE train from the airport to Nürnberg. The conference is at the Holiday Inn hotel, and with nearly 300 of us there we kind of take over the place. I did think it was funny that on my first trip there the instructions on how to get to the hotel from the train station were not very direct. I found out the reason was that the most direct route takes you by the red light district and I guess they wanted us to avoid that, although I never felt unsafe wandering around the city.

We arrived mid-afternoon and checked in with Daniela to get our badges and other information. She is one of the people who work hard to make sure all attendees have a great time.

I managed to take a short nap and get settled in, and then we met up for dinner. The food at these events is really nice, and I’m always a fan of German beer.

I excused myself after the meal, partly because of jet lag and partly because I needed to finish my presentation, and I wanted to be ready for the first real day of the conference.

The conference was started by Bernd Erk, who is sort of the master of ceremonies.

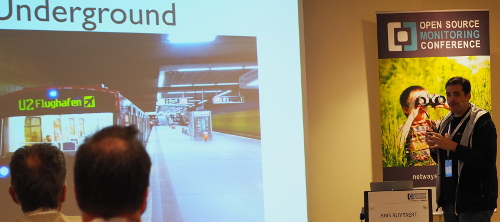

He welcomed us and covered some housekeeping issues. The party that night was to be held at a place called Terminal 90, which is actually at the airport. Last time they tried to use buses, but it became pretty hard to organize, so this time they arranged for us to take public transportation via the U-Bahn. After the introduction we then broke into two tracks and I decided to stay to hear Kris Buytaert.

I’ve known Kris through his blog for years now, but this was the first time I got to see him in person. He is probably most famous in my circles for introducing the hashtag #monitoringsucks. Since I use OpenNMS I don’t really agree, but he does raise a number of issues that make monitoring difficult, and he described some of the methods he uses to address them.

The rest of the day saw a number of good presentations. As this conference has a large number of Germans in attendance, a little less than half of the tracks are given in German, but there was also always an English language track at the same time.

One of my favorite talks from the first day was on MQTT, a protocol for monitoring the Internet of Things. It addresses how to deal with devices that might not always be online, and was demonstrated via software running on a Raspberry Pi. I especially liked the idea of a “last will and testament” which describes how the device should be treated if it goes offline. I’m certain we’ll be incorporating MQTT into OpenNMS in the future.
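For the curious, here is a rough sketch of how I understand the “last will and testament” idea. It is a toy in-memory model in Python, not a real MQTT client or broker: the `ToyBroker` class, the `pi-1` client id and the topic name are all made up for illustration, and it only matches topics exactly (no wildcards). The point is just that the device registers its will when it connects, and the broker publishes it only if the connection is lost without a clean disconnect.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Will:
    """Message the broker should publish on the client's behalf."""
    topic: str
    payload: str


class ToyBroker:
    """Hypothetical in-memory stand-in for an MQTT broker (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[str, str], None]]] = defaultdict(list)
        self._wills: Dict[str, Will] = {}

    def subscribe(self, topic: str, callback: Callable[[str, str], None]) -> None:
        # Exact topic match only; real MQTT also supports wildcards.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: str) -> None:
        for callback in list(self._subscribers[topic]):
            callback(topic, payload)

    def connect(self, client_id: str, will: Optional[Will] = None) -> None:
        # The will is registered at connect time and stored by the broker.
        if will is not None:
            self._wills[client_id] = will

    def disconnect(self, client_id: str) -> None:
        # A clean disconnect discards the will without publishing it.
        self._wills.pop(client_id, None)

    def connection_lost(self, client_id: str) -> None:
        # An unexpected drop makes the broker publish the stored will.
        will = self._wills.pop(client_id, None)
        if will is not None:
            self.publish(will.topic, will.payload)


def demo() -> List[Tuple[str, str]]:
    broker = ToyBroker()
    seen: List[Tuple[str, str]] = []
    broker.subscribe("sensors/pi-1/status", lambda t, p: seen.append((t, p)))

    broker.connect("pi-1", Will("sensors/pi-1/status", "offline"))
    broker.publish("sensors/pi-1/status", "online")
    broker.connection_lost("pi-1")  # simulate the Pi dropping off the network
    return seen


if __name__ == "__main__":
    for topic, payload in demo():
        print(topic, payload)
```

A monitoring system subscribed to the status topic would see the device go from "online" to "offline" without having to poll it, which is what makes the idea attractive for devices that come and go.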

Ronny and I missed the subway trip to the restaurant because I discovered a bug in my presentation configuration and it took me a little while to correct it, but I managed to get it done and we just grabbed a taxi. Even though it was in the airport, it was a nice venue and we caught up with Kris and my friend Rihards Olups from Zabbix. I first met Rihards at this conference several years ago and he brought me a couple of presents from Latvia (he lives near Riga). I still have the magnet on my office door.

Ulf, however, wasn’t as pleased to meet them.

We had a lot of fun eating, drinking and talking. The food was good and the staff was attentive. Ulf was much happier with our waitress (so was Ronny).

I had to call it an early night because my presentation was the first one on Thursday, but a lot of people didn’t. After the restaurant closed they moved to “Checkpoint Jenny” which was right across the street from the hotel (and under my window). Some were up until 6am.

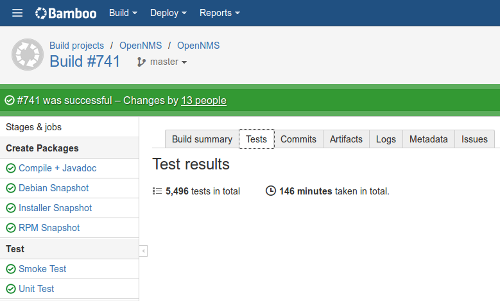

Needless to say, the crowds were a little lighter for my talk. I think it went well, but next year I might focus more on why you might want to move away from check scripts to something a little more scalable. I did a really cool demo (well, in my mind) about sending events into OpenNMS to monitor the status of scripts running on remote servers, but it probably was hard to understand from a Nagios point of view.

Both Rihards and Kris made it to my talk, and Rihards once again brought gifts. I got a lot of tasty Latvian candy (which is now in the office, since my wife ordered me to get it out of the house so it won’t get eaten) as well as a bottle of Black Balsam, a liqueur local to the region.

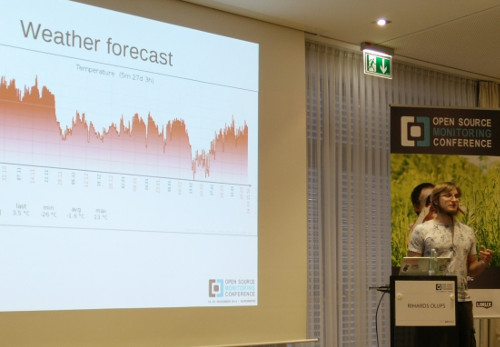

Rihards spoke after lunch, and most people were mobile by then. I enjoyed his talk and was very impressed to learn that every version of the remote proxy ever written for Zabbix is still supported.

I had to head back to Frankfurt that evening so I could fly home on Friday (my father celebrated his 75th birthday and I didn’t want to miss it) but we did find time to get together for a beer before I left. It was cool to have people from so many different monitoring projects brought together through a love of open source.

Next year the conference is from 16-18 November. I plan to attend, and I hope to spend more time in Germany on that trip than I had available on this one.