It seems like our friends over at Nagios have been watching a little too much election coverage this year, and they’ve updated their “Nagios vs. OpenNMS” document with even more rhetoric and misinformation.

As my three readers may recall, back in 2011 I tore apart the first version of this document. Now they have decided to update it to target our Meridian™ version.

Let’s see how they did (please look at it and follow along as it is quite amusing).

The first misleading bit is the opening paragraph with the phrase “most widely used open-source monitoring project in the world”. Now, granted, they do indicate that means “Nagios Core” but it seems a little disingenuous since what they are selling is Nagios XI, which is much different.

Nagios XI is not open source. It is published under the “Nagios Open Software License” which is about as proprietary as they get. I’m not even sure why the word “open” was added, except to further mislead people into thinking it is open source. The license contains clauses like “The Software may not be Forked” and “The Software may only be used in conjunction with products, projects, and other software distributed by the Company.” Think about it, you can’t even integrate Nagios XI with, say, a home grown trouble ticketing system without violating the license. Doesn’t sound very “open” at all. OpenNMS Meridian is published under the AGPLv3, or a similar proprietary license should your organization have an issue with the AGPL. You don’t have that choice with Nagios XI.

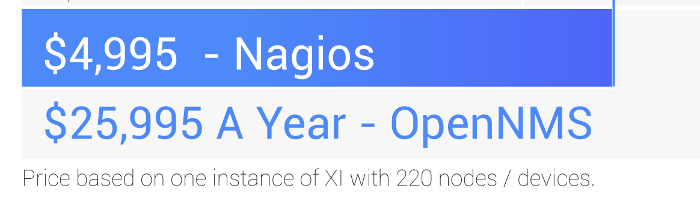

Next, let’s check out the price. The OpenNMS Group has always published its prices on-line. One instance of Meridian, which includes support in the form of access to our “Connect” community, is $6,000. They have it listed as $25,995, which is the price should you choose the much more intensive “Prime” support option. I’m not sure why they didn’t just choose our most expensive product, Ultra Support with the 24×7 option, to make them seem even better.

Also, note the fine print “Price based on one instance of XI with 220 nodes/devices”. There is no device limit with OpenNMS Meridian. So let’s be clear, for $6000 you get access to the Meridian software under an open source license versus $5000 to monitor 220 nodes with extreme limitations on your rights.

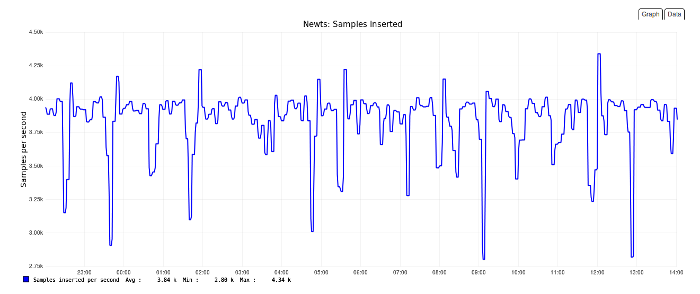

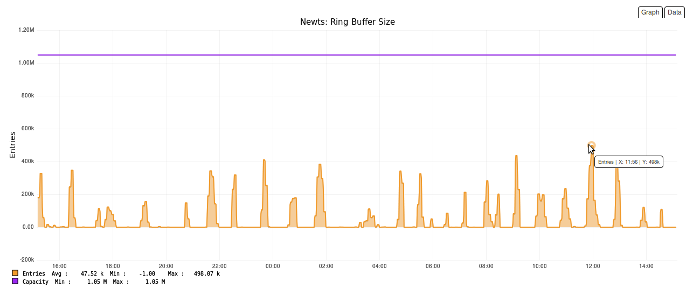

Our smaller customers tend to have around 2000 devices, which means to manage that with Nagios XI you would need roughly ten instances costing nearly $50,000 (using the math presented in this document). And from the experience we’ve heard with customers coming to us from Nagios, the reason it is limited to so few nodes is that you probably can’t run much more on a single instance of Nagios XI. Compare that to OpenNMS where we have customers with over 100,000 devices in a single instance (and they’ve been running it for years).

We also price OpenNMS as a platform. You get everything: trouble-ticketing integration, graphing, reporting, etc. in one application. It looks like Nagios has decided to nickel and dime you for logs, etc. and a thing called “Nagios Fusion” which you’ll need to manage your growing number of Nagios instances since it won’t natively scale. And remember, due to the license you are forbidden from using the software with your own tools.

I especially had to laugh at the “You Speak, We Listen” part. If you have a feature or change you need, if you ask nicely they might make it for you. With OpenNMS Meridian you are free to make any changes you need since it is 100% open source, and with our open issue tracker we address dozens of user requests each point release.

Finally, there is the feature comparison, which at a minimum is misleading and is often just blatantly false. Almost every feature marked as lacking in Meridian exists, and at a level far beyond what Nagios XI can provide. Seriously, is it really objective to state that OpenNMS doesn’t support Nagvis, a specific tool that even has “Nagios” in the name?

I had to laugh at the hubris. They obviously didn’t Google “opennms nagvis“, because, guess what? There has been an OpenNMS Nagvis integration for some time now, contributed by our community. Just in case you were wondering, we have an integration with Network Weathermap as well.

Nagios is just another proprietary software product that wants to lock you into its ecosystem, and this is just a shameful attempt to monetize an application that is long past its prime. Heck, it was the inability of the Nagios leadership to get along with others that resulted in the very popular Icinga fork, and with it Nagios lost a lot of contribution that helped make up its “Thousands of Free Add-Ons” (and the way Nagios took over the community lead plug-in site was also poorly handled). Plus, many of those add-ons won’t scale in an enterprise environment, which probably lead to the 220 device limit.

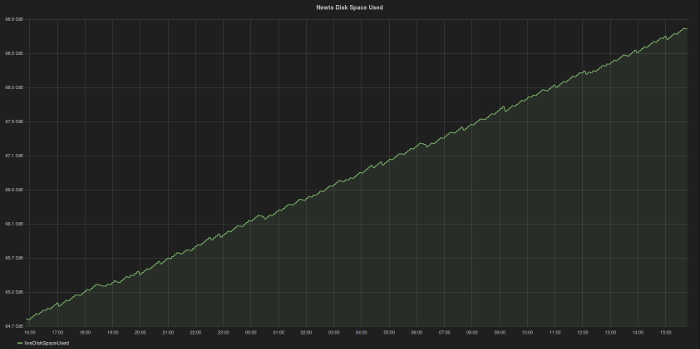

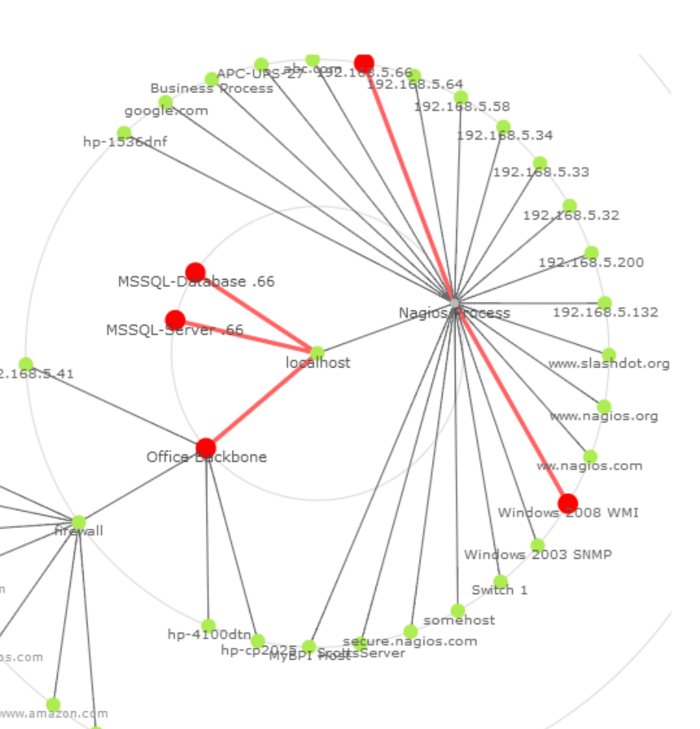

Compare that to OpenNMS. We not only want to encourage you integrate with other products, we do a lot of it for you. OpenNMS has great graphing, but we also created the first third party plug-in for Grafana. When it comes to mapping, OpenNMS is on the leading edge, with a focus on various topology views that can ultimately handle millions of devices in a fashion that is actually usable. Need to see a Layer 2 topology? Choose the “enhanced linkd view”. Run VMware and Vcenter? It is simple to import all of your machines and see them in a view that shows hosts, guests and network storage. Plus the unique ability to focus on just those devices of interest allows you to use a map with hundreds of thousands if not millions of nodes.

Compare that to the Nagios map screenshot where it looks like “localhost” is having some issues. Oh no, not localhost! That’s like, all of my machines.

As for “Business Process Intelligence” I’ve been told that the Nagios XI version is like our Business Service Monitor “Except BSM is more featureful, and has a significantly better UI/UX”. Need real Business Intelligence? OpenNMS has Red Hat Drools support, the open source leader, built right into the product.

We also support integration with popular Trouble Ticketing systems such as Request Tracker, Jira, OTRS and Remedy. And the kicker is that you can also run any Nagios check script natively in OpenNMS using the “System Execute Monitor“, but once you get used to the OpenNMS platform, why would you?

I’m not really sure why Nagios goes out of its way to spread fear, uncertainty and doubt about OpenNMS. We rarely compete in the same markets. I’m sure that Sunrise Community Banks get their money’s worth from Nagios, and for companies like NRS Small Business Solutions, Nagios might be a good fit. But if you have enterprise and carrier-level requirements, there is no way Nagios will work for you in the long term.

When a company does something like this to mislead, from wrong information about our product to using terms like “open” when they mean “closed”, it shows you what they think of their competition. What does it say about what they think about their customers?